Why scientists want AI regulated now before it's too late

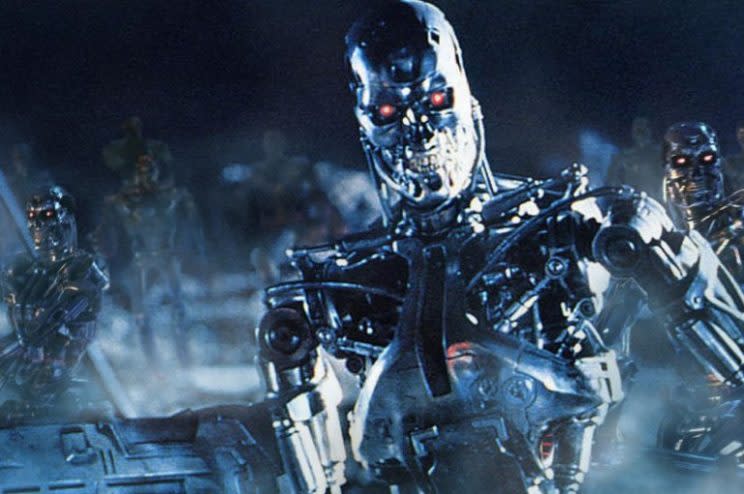

Elon Musk, CEO of Tesla (TSLA) and SpaceX, recently sounded the alarm that murderous robots powered by artificial intelligence could one day rampage through American neighborhoods. And the only way to stop them, he said, is to begin regulating AI before it destroys us all.

Musk’s warnings that AI poses an “existential threat” may have been a bit dramatic, but he’s not the only expert hoping for some kind of government regulation of AI. And as companies from Apple (AAPL) and Amazon (AMZN) to Facebook (FB) and Google (GOOG, GOOGL) continue pouring money into the field, those regulations may be needed sooner rather than later.

AI on the market

Carnegie Mellon’s Manuela Veloso, an expert on AI, doesn’t believe we’re even close to the point where an army of T-1000s will march down Broadway and demand our fealty.

But any AI-powered products that reach the mass market should be regulated to ensure consumer safety, according to Veloso, head of the Machine Learning Department at Carnegie Mellon’s School of Computer Science.

“I believe there should be regulation [of AI] the same way if you and I would create some kind of milk in a factory,” Veloso said, noting that the Food and Drug Administration, for example, would have to approve a “new kind of milk” before it reached the general public.

Veloso, however, draws the line at regulating AI research. Instead, she believes scientists should be able to push the limits of AI as far as they can in the safety of their labs.

“I think the research, before it becomes a product, you can experiment, you can research or anything, otherwise we’ll never advance the discoveries of AI,” she said.

Regulating AI like people

Bain & Company’s Chris Brahm, meanwhile, believes AI should be regulated not just when it serves the mass market, but also when it’s tasked with performing jobs for which we already regulate humans, such as banking.

“Today, as a society we have clearly decided that certain types of human decision making need to be regulated in order to protect citizens and consumers. Why then would we not, if machines start making those decisions … regulate the decision making in some form or fashion?” he said.

Who regulates the AI?

So researchers and experts agree that regulations should be put in place. The big question, though, is who will write those rules.

The government doesn’t have a regulatory body dedicated to ensuring that AI is properly vetted. And while AI may not be able to stomp around crushing cars, the technology is already beginning to permeate our society, from our smartphones to our hospitals.

It doesn’t look like such a body will take shape and begin issuing rules anytime soon, either. A House panel only recently began discussing regulations for self-driving cars, even though those vehicles are already on highways and residential streets in some states.

“Generating and enforcing such regulations can be very hard, but we can take it as a challenge,” Veloso said.

So while Musk’s fear that the robot apocalypse is nearly upon us might be far-fetched, his concerns over whether the government can implement any kind of regulation in a timely fashion are very real.

Email Daniel at [email protected]; follow him on Twitter at @DanielHowley.